In machine learning, the ultimate goal is not to perform well on training data — it is to perform well on unseen data.

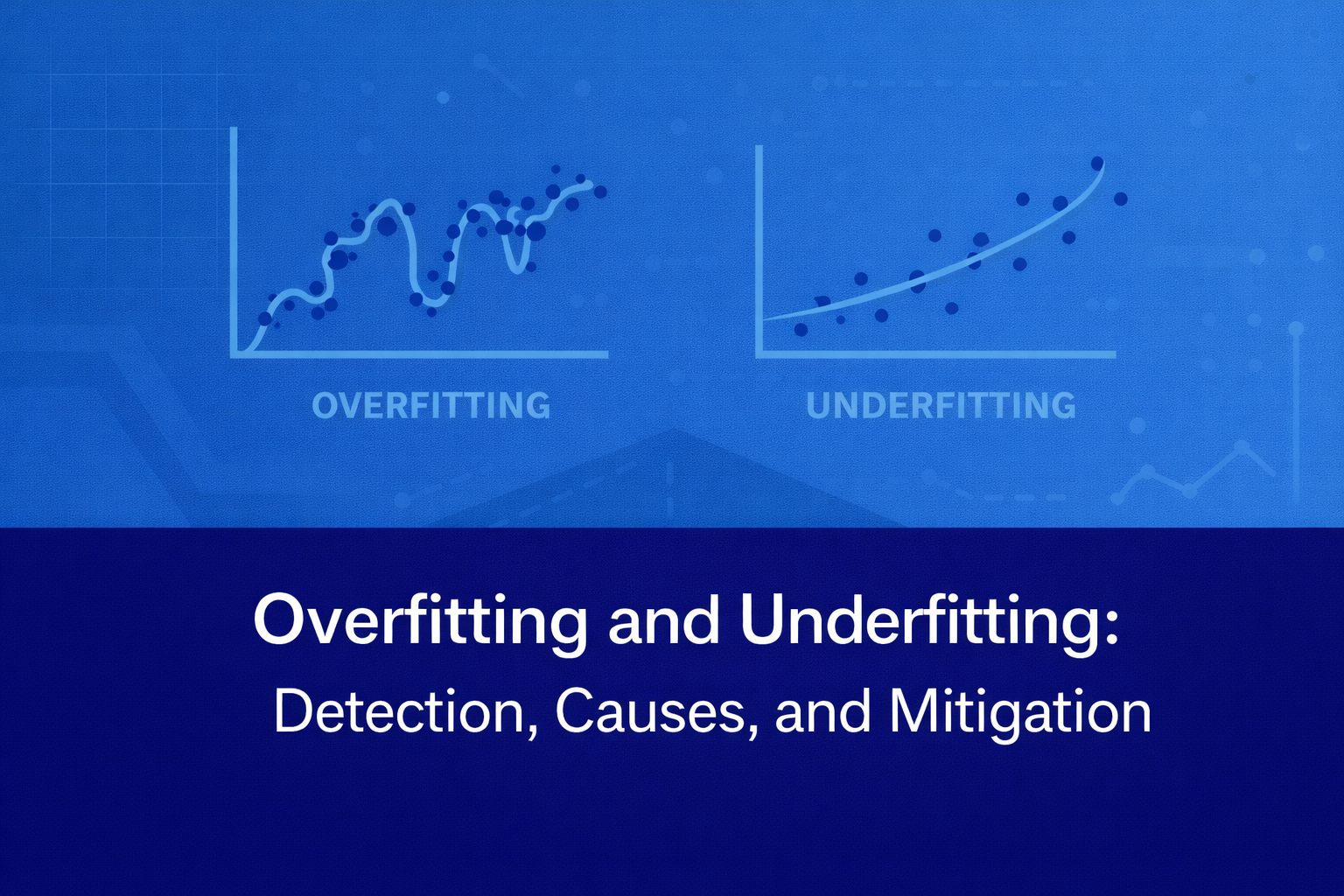

Overfitting and underfitting are two failure modes that prevent this from happening.

When interviewers ask about these concepts, they are evaluating whether you understand:

- The relationship between model complexity and generalization

- How to diagnose training behavior

- How to fix models systematically rather than randomly

1. What is Underfitting?

Definition

Underfitting occurs when a model is too simple to capture the underlying structure of the data.

It fails to learn important patterns.

The model has high bias and low variance.

Symptoms of Underfitting

- High training error

- High validation error

- Similar performance on both datasets

- Poor predictive performance overall

If your model performs badly even on training data, it is underfitting.

Why Underfitting Happens

Common causes:

- Model too simple (e.g., linear model for nonlinear data)

- Insufficient features

- Over-regularization

- Too few training iterations

- Poor feature engineering

Example

Imagine modeling house prices using only square footage, ignoring:

- Location

- Age of property

- Amenities

Even with lots of data, the model cannot learn complex relationships.

That’s underfitting.

Interview Insight

Strong candidates say:

“If both training and validation errors are high and similar, the model likely lacks representational capacity.”

That signals maturity.

2. What is Overfitting?

Definition

Overfitting occurs when a model learns not only the true patterns but also the noise in the training data.

It performs well on training data but poorly on new data.

The model has low bias and high variance.

Symptoms of Overfitting

- Very low training error

- High validation/test error

- Large gap between training and validation performance

This is the classic “memorization problem.”

Why Overfitting Happens

Common causes:

- Model too complex

- Small dataset

- Too many features

- No regularization

- Excessive training

- Data leakage

Example

A deep neural network trained on 500 data points:

- 100% training accuracy

- 65% validation accuracy

The model has memorized the training examples.

Interview Insight

Strong candidates add:

“Overfitting often indicates that the hypothesis space is too large relative to the dataset size.”

That’s a production-level explanation.

3. The Training vs Validation Curve Perspective

Interviewers love when candidates describe this intuitively.

Underfitting Pattern

Training Error: High

Validation Error: High

Gap: Small

Overfitting Pattern

Training Error: Low

Validation Error: High

Gap: Large

Good Fit Pattern

Training Error: Low

Validation Error: Low

Gap: Small

Being able to explain this verbally shows deep intuition.

4. Why This Matters in Production

Overfitting and underfitting are not academic issues.

They directly impact:

- Customer experience

- Fraud detection reliability

- Medical diagnosis accuracy

- Revenue optimization

- Operational stability

An overfitted fraud model:

- Generates too many false alarms

- Creates alert fatigue

An underfitted medical model:

- Misses critical diagnoses

Both are dangerous — for different reasons.

5. How to Fix Underfitting

If the model is underfitting:

Increase Model Capacity

- Use nonlinear models

- Add polynomial terms

- Use deeper architectures

Improve Features

- Feature engineering

- Add domain knowledge features

Reduce Regularization

- Lower L1/L2 penalties

- Reduce dropout rate

Train Longer

- More iterations (if prematurely stopped)

6. How to Fix Overfitting

If the model is overfitting:

Add More Data

Most powerful solution.

Regularization

- L1 / L2 penalties

- Dropout (deep learning)

- Weight decay

Reduce Model Complexity

- Shallower tree

- Smaller network

- Fewer features

Cross-Validation

Ensure robust performance estimation.

Early Stopping

Stop training when validation error increases.

Data Augmentation

Especially in computer vision.

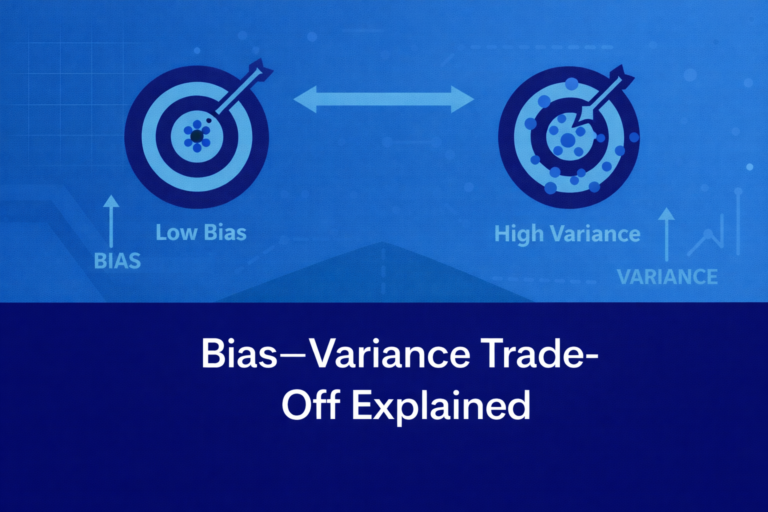

7. Relationship to Bias–Variance

Underfitting = High Bias

Overfitting = High Variance

But here’s the nuance:

- Bias and variance describe error components

- Overfitting and underfitting describe observed behavior

Strong candidates understand both layers.

8. Common Interview Mistakes

❌ Saying “overfitting means 100% training accuracy”

(Not always true.)

❌ Confusing data leakage with overfitting

(Data leakage is worse.)

❌ Suggesting “always use deep learning”

(Complexity increases overfitting risk.)

❌ Ignoring regularization strategies

9. Real Interview Scenario

Interviewer:

“Your model has 98% training accuracy but 78% validation accuracy. What would you do?”

Strong structured response:

- Confirm no data leakage

- Check class imbalance

- Add regularization

- Simplify model

- Try cross-validation

- Increase dataset size if possible

That systematic reasoning is what interviewers reward.

10. Advanced Perspective: Overfitting Is Not Always Bad

In large models (e.g., deep networks), models can:

- Perfectly fit training data

- Still generalize well

This challenges classical intuition.

Modern ML shows that:

- Overparameterized models can generalize under certain regimes

- Implicit regularization plays a role

Mentioning this in senior interviews shows depth.

Final Thought: Generalization Is the Goal

Overfitting and underfitting are symptoms of imbalance between:

- Model complexity

- Data availability

- Regularization strength

The goal is not:

- Lowest training error

- Most complex model

The goal is:

Stable, reliable performance on unseen data.

If you can explain:

- What they are

- How to diagnose them

- How to fix them

- Why they matter operationally

You demonstrate readiness to build AI systems that survive beyond demos.