Artificial Intelligence is a vast and evolving field. Frameworks change. Libraries evolve. Architectures get replaced.

But certain core principles remain constant.

If you strip away hype, tooling, and buzzwords, every strong AI practitioner understands a set of foundational concepts that govern how intelligent systems are built, evaluated, and deployed.

Interviewers test these fundamentals relentlessly—not because they want textbook definitions, but because these concepts shape engineering judgment.

Here are the ten essential AI concepts every practitioner must deeply understand.

1. Generalization

The central goal of AI is not memorization—it is generalization.

A model must perform well on unseen data, not just training data.

Key understanding:

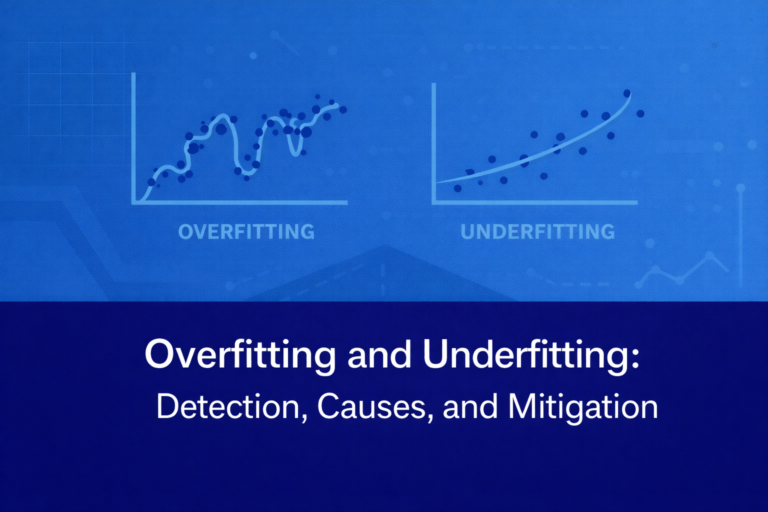

- Training error vs validation error

- Overfitting vs underfitting

- Bias–variance trade-off

If you don’t understand generalization, you don’t understand ML.
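The gap between training error and validation error can be seen in a small experiment. The sketch below is illustrative only: the synthetic data, the 20% label noise, and the helper names (`make_data`, `predict_1nn`, `error_rate`) are assumptions made for this example. It uses a 1-nearest-neighbor rule, which memorizes the training set.

```python
import random

def make_data(n, rng, noise=0.2):
    """Points on a line; the label is 1 when x > 0, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = int(x > 0)
        if rng.random() < noise:
            y = 1 - y  # simulate label noise
        data.append((x, y))
    return data

def predict_1nn(train, x):
    """Return the label of the closest training point (pure memorization)."""
    return min(train, key=lambda p: abs(p[0] - x))[1]

def error_rate(train, data):
    """Fraction of points in `data` that the 1-NN rule gets wrong."""
    wrong = sum(predict_1nn(train, x) != y for x, y in data)
    return wrong / len(data)

rng = random.Random(0)
train = make_data(200, rng)
val = make_data(200, rng)

train_error = error_rate(train, train)  # each point is its own nearest neighbor
val_error = error_rate(train, val)      # unseen points expose the memorized noise
print(f"train error: {train_error:.2f}, validation error: {val_error:.2f}")
```

The memorizing model scores perfectly on the data it has seen and noticeably worse on new data. That gap is what overfitting looks like in practice.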

2. Data as the Foundation

AI systems are only as good as their data.

Practitioners must understand:

- Data collection and labeling quality

- Class imbalance

- Sampling bias

- Data leakage

- Drift over time

Strong engineers think:

“Before choosing a model, I need to understand the data distribution.”

Models are downstream decisions. Data is upstream truth.
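Two of the checks above, class imbalance and data leakage, can be sketched in a few lines. The labels, rows, and function names here are hypothetical and chosen only for illustration. Real leakage checks usually go beyond exact duplicates, for example by looking for features that encode the target.

```python
from collections import Counter

def class_balance(labels):
    """Return the fraction of examples per class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}

def leaked_rows(train_rows, test_rows):
    """Return rows that appear in both splits (one simple form of leakage)."""
    return set(train_rows) & set(test_rows)

# Hypothetical labels: 95 normal transactions and 5 fraudulent ones.
labels = ["ok"] * 95 + ["fraud"] * 5
balance = class_balance(labels)

# Hypothetical rows, represented as hashable tuples.
train_rows = [(1, "a"), (2, "b"), (3, "c")]
test_rows = [(3, "c"), (4, "d")]
leaks = leaked_rows(train_rows, test_rows)

print(balance)
print(leaks)
```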

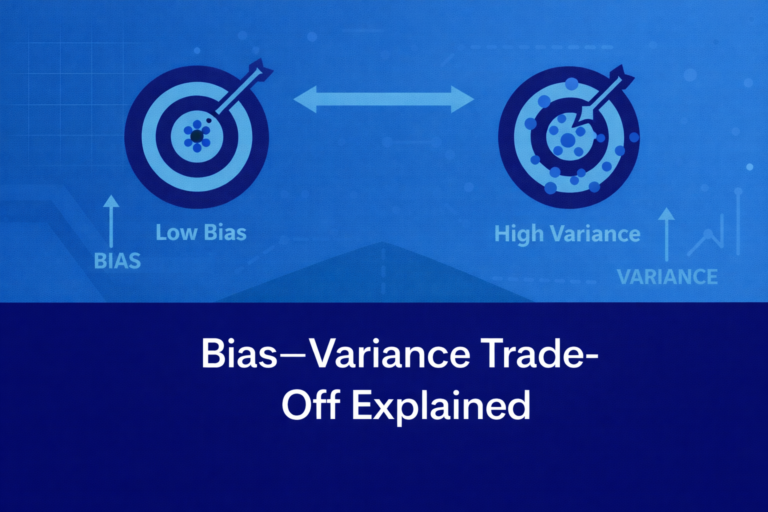

3. Bias–Variance Trade-Off

Every model exists on a spectrum between:

- Simplicity (high bias)

- Complexity (high variance)

Model complexity must align with:

- Dataset size

- Feature richness

- Noise level

This concept governs:

- Regularization

- Architecture selection

- Ensemble methods

Without this understanding, tuning becomes guesswork.
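For squared-error regression, the trade-off has a standard formal statement. The notation below is an assumption introduced for this illustration: data follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}_D$ is the model fitted on a random training set $D$.

$$
\mathbb{E}\big[(y - \hat{f}_D(x))^2\big]
= \underbrace{\big(\mathbb{E}_D[\hat{f}_D(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\big[(\hat{f}_D(x) - \mathbb{E}_D[\hat{f}_D(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Simpler models tend to raise the first term, more flexible models tend to raise the second, and no model choice removes the third.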

4. Optimization and Gradient Descent

Most modern AI systems are trained through optimization.

Understanding:

- Loss functions

- Gradient descent

- Learning rate

- Convergence behavior

is non-negotiable.

Practitioners must know:

- Why optimization may fail

- How local minima or saddle points affect training

- Why learning rate tuning matters

Optimization is the engine of learning.
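A minimal sketch of why learning rate tuning matters, assuming a toy one-dimensional loss $(w - 3)^2$ picked only for illustration. The function name and the two learning rates are likewise assumptions.

```python
def gradient_descent(learning_rate, steps=50, start=0.0):
    """Minimize the toy loss (w - 3)^2 with plain gradient descent."""
    w = start
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)     # derivative of (w - 3)^2
        w -= learning_rate * grad  # step against the gradient
    return w

w_small = gradient_descent(0.1)  # each step shrinks the distance to 3
w_large = gradient_descent(1.1)  # each step overshoots and grows the distance

print(f"learning rate 0.1 -> w = {w_small:.4f}")
print(f"learning rate 1.1 -> w = {w_large:.1f}")
```

On this loss, each update multiplies the distance to the minimum by `1 - 2 * learning_rate`. The first run therefore converges and the second diverges, even though both follow the same gradient.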

5. Evaluation Metrics and Alignment

Metrics determine system behavior.

You must understand:

- Accuracy vs precision vs recall

- F1-score

- ROC-AUC

- Business-aligned metrics

AI systems fail when metrics do not reflect real-world cost.

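A fraud model optimized only for accuracy can be operationally disastrous: when fraud is rare, a model that flags nothing still scores high accuracy while catching no fraud at all.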

Metrics encode priorities.
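The fraud example can be made concrete with a short sketch. The counts are hypothetical (10 fraudulent transactions out of 1,000), and the "model" simply predicts "not fraud" for everything. Returning 0.0 when a denominator is zero is a convention adopted here, not a universal rule.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # convention: 0.0 when undefined
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1] * 10 + [0] * 990  # 1% of transactions are fraud
y_pred = [0] * 1000            # the model never flags anything
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Accuracy looks excellent, while recall shows that no fraud was caught.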

6. Feature Representation

Raw data is rarely ready for modeling.

Feature engineering and representation learning determine:

- Model performance

- Interpretability

- Stability

Understanding:

- Encoding categorical data

- Scaling numerical features

- Embeddings

- Dimensionality reduction

is foundational.

In deep learning, much of the representation is learned from data rather than hand-engineered, but the principle remains the same.
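As a rough illustration of two items from the list above, here is a sketch of one-hot encoding and standardization using only the standard library. The helper names and sample values are assumptions. Production code would more likely use a library transformer fitted on training data only.

```python
from statistics import mean, pstdev

def one_hot(values):
    """Encode categorical values as 0/1 vectors, one column per sorted category."""
    categories = sorted(set(values))
    encoded = [[int(v == c) for c in categories] for v in values]
    return categories, encoded

def standardize(values):
    """Scale numbers to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # a constant feature carries no signal
    return [(v - mu) / sigma for v in values]

categories, encoded = one_hot(["red", "green", "red"])
scaled = standardize([1.0, 2.0, 3.0])

print(categories, encoded)
print(scaled)
```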

7. Model Complexity and Capacity

Every model has a representational capacity.

Too little → underfitting

Too much → overfitting

Understanding capacity means understanding:

- Hypothesis space

- Degrees of freedom

- Parameter count

This influences:

- Architecture choice

- Regularization need

- Dataset requirements

Complex models demand stronger controls.
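Parameter count is the easiest of these quantities to compute, though it is only a crude proxy for capacity. The sketch below counts weights and biases in a fully connected network. The layer sizes are hypothetical and chosen only for illustration.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    `layer_sizes` lists the width of each layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # weight matrix
        total += n_out         # bias vector
    return total

small = dense_parameter_count([784, 128, 10])
wide = dense_parameter_count([784, 1024, 10])
print(small, wide)
```

Widening a single hidden layer multiplies the parameter count several times over, which raises the data and regularization needed to train the model well.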

8. Regularization

Regularization is how we control complexity.

Techniques include:

- L1 / L2 penalties

- Dropout

- Early stopping

- Data augmentation

But conceptually, regularization means:

Constraining the model to prefer simpler, more stable solutions.

Without regularization, high-capacity models tend to fit noise.
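To make "prefer simpler solutions" concrete, consider an L2 penalty on a one-weight linear model without an intercept. Minimizing $\sum_i (y_i - w x_i)^2 + \lambda w^2$ gives $w = \sum_i x_i y_i / (\sum_i x_i^2 + \lambda)$. The data and the penalty strength below are assumptions made for illustration.

```python
def fit_slope(xs, ys, l2_penalty=0.0):
    """Closed-form slope for y ~ w * x with an optional L2 penalty on w."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + l2_penalty)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = fit_slope(xs, ys)                   # ordinary least squares
w_ridge = fit_slope(xs, ys, l2_penalty=14.0)  # penalty pulls the weight toward zero

print(w_plain, w_ridge)
```

The penalty shrinks the weight toward zero, trading some fit on the training data for a more conservative solution.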

9. Interpretability and Explainability

Modern AI systems increasingly impact high-stakes domains.

Practitioners must understand:

- Feature importance

- Model transparency

- SHAP and LIME

- Trade-offs between performance and interpretability

Deep models may perform better—but can you explain them?

In regulated domains, explainability is not optional.
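SHAP and LIME are full libraries, so this sketch uses a simpler and different technique, permutation importance: shuffle one feature column and measure how much accuracy drops. The model, data, and function names are hypothetical stand-ins.

```python
import random

def model(row):
    """Hypothetical model: it only looks at feature 0."""
    return int(row[0] > 0.5)

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, feature, rng):
    """Accuracy drop after shuffling one feature column."""
    baseline = accuracy(rows, labels)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    # Rebuild each row with the shuffled value in place of the original one.
    shuffled = [r[:feature] + (v,) + r[feature + 1:] for r, v in zip(rows, column)]
    return baseline - accuracy(shuffled, labels)

rng = random.Random(0)
rows = [(rng.random(), rng.random()) for _ in range(200)]
labels = [int(x0 > 0.5) for x0, _ in rows]

importances = [permutation_importance(rows, labels, f, rng) for f in range(2)]
print(importances)
```

Shuffling the feature the model depends on hurts accuracy, while shuffling the ignored feature changes nothing. That contrast is the signal a feature-importance report is meant to surface.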

10. Deployment and Lifecycle Thinking

An AI system is not finished when training ends.

Practitioners must understand:

- Model serving

- Monitoring for drift

- Retraining strategies

- A/B testing

- Versioning

Many candidates think AI ends at training accuracy.

Senior practitioners think:

“How will this model behave six months after deployment?”

Lifecycle thinking separates prototypes from production systems.
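One common way to monitor for drift is the Population Stability Index (PSI), which compares the binned distribution of a score or feature at training time with what is seen in production. The sketch below is a minimal version under stated assumptions: values lie in a known range, bins are equal-width, and empty bins are floored at a small `eps`. The sample data and names are illustrative.

```python
import math

def bin_fractions(values, lo, hi, bins):
    """Fraction of values falling in each of `bins` equal-width bins over [lo, hi]."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / (hi - lo) * bins)
        counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
    return [c / len(values) for c in counts]

def psi(expected, actual, lo=0.0, hi=1.0, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    e_fracs = bin_fractions(expected, lo, hi, bins)
    a_fracs = bin_fractions(actual, lo, hi, bins)
    total = 0.0
    for e, a in zip(e_fracs, a_fracs):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

training_scores = [i / 100 for i in range(100)]    # spread evenly over [0, 1)
live_scores = [0.5 + i / 200 for i in range(100)]  # concentrated in the upper half

psi_same = psi(training_scores, training_scores)
psi_shifted = psi(training_scores, live_scores)
print(psi_same, psi_shifted)
```

A frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but the right alert threshold depends on the application.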

The Meta Concept: Trade-Off Awareness

Across all ten concepts lies a deeper principle:

AI is a field of trade-offs.

- Accuracy vs interpretability

- Speed vs complexity

- Automation vs control

- Precision vs recall

- Innovation vs reliability

Strong practitioners make decisions consciously—not reactively.

How Interviewers Evaluate These Concepts

Interviewers rarely ask:

“List ten AI concepts.”

Instead, they probe:

- Why did you choose this model?

- How would you detect drift?

- Why not use deep learning?

- How do you prevent overfitting?

Every one of these questions maps back to the fundamentals.

Candidates who understand the foundations can navigate any advanced topic confidently.

Final Thought: Foundations Outlast Frameworks

Tools will evolve.

Frameworks will change.

Architectures will improve.

But these ten concepts form the intellectual backbone of AI engineering.

If you master them, you can:

- Adapt to new technologies

- Justify architectural decisions

- Handle production challenges

- Answer interview questions with clarity

AI literacy is not about memorizing models.

It is about understanding principles.

And principles scale.